Using AI to classify data from solar images

New research describes how scientists from the University of Graz, the Kanzelhöhe Solar Observatory (Austria), and Skolkovo Institute of Science and Technology (Skoltech) have used artificial intelligence to develop a method to classify and quantify data from solar images. Their findings are reported in the journal Astronomy & Astrophysics.

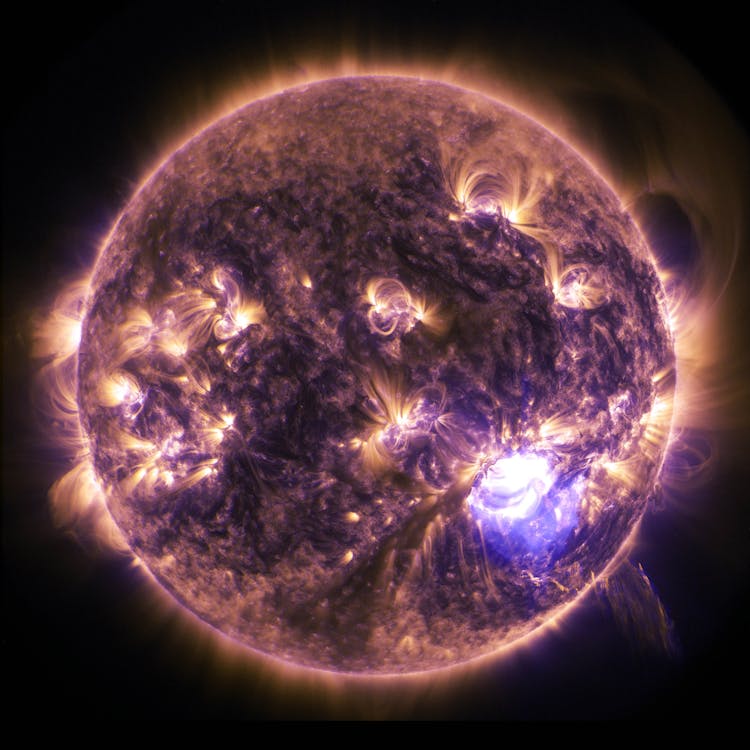

With the rapid growth of solar physics in recent decades, scientists can no longer keep up with manually sorting the data collected by ground- and space-based observatories. Together, these telescopes provide continuous monitoring of the Sun regardless of daylight or local weather conditions. However, images taken from the ground are often obscured by clouds or degraded by air fluctuations in Earth's atmosphere, so trained scientists must typically spend tedious hours on image quality assessment to select accurate images.

"As humans, we assess the quality of a real image by comparing it to an ideal reference image of the Sun. For instance, an image with a cloud in front of the solar disk – a major deviation from our imaginary perfect image – would be tagged as a very low-quality image, while minor fluctuations are not that critical when it comes to quality. Conventional quality metrics struggle to provide a quality score independent of solar features and typically do not account for clouds," explains co-author Tatiana Podladchikova, who is an assistant professor at the Skoltech Space Center (SSC).

To address this problem, the research team used deep learning to identify solar images with stable image quality. They employed a neural network based on a Generative Adversarial Network (GAN) to learn the features of high-quality images, which allows them to estimate how far real observations deviate from an ideal reference. The authors write:

“We use adversarial training to recover truncated information based on the high-quality image distribution. When images of reduced quality are transformed, the reconstruction of unknown features (e.g., clouds, contrails, partial occultation) shows deviations from the original. This difference is used to quantify the quality of the observations and to identify the affected regions.”
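The idea in the quote can be illustrated with a toy sketch. This is not the authors' implementation: the trained generator is replaced by a hypothetical stand-in (`idealized_reconstruct`) that always returns a known clean image, the "solar disk" is synthetic, and the squared pixel difference is an assumed deviation measure chosen only for illustration. All function names are made up for this example.

```python
import numpy as np

def synthetic_disk(size=64, radius=24):
    """Toy 'solar disk': a bright circle on a dark background."""
    y, x = np.mgrid[:size, :size]
    r = np.hypot(x - size / 2, y - size / 2)
    return (r <= radius).astype(float)

def quality_score(image, reconstruct):
    """Compare an image with its reconstruction.

    Returns a scalar deviation (lower means closer to the high-quality
    reference) and a per-pixel deviation map that marks affected regions.
    """
    reconstruction = reconstruct(image)
    deviation_map = (image - reconstruction) ** 2  # assumed metric
    return float(deviation_map.mean()), deviation_map

reference = synthetic_disk()

def idealized_reconstruct(image):
    # Assumption: stands in for a generator trained on high-quality images.
    # A real model would reconstruct each input; this one simply returns
    # the clean reference, so unknown features such as clouds are not kept.
    return reference

clean = reference.copy()
cloudy = reference.copy()
cloudy[20:36, 24:44] *= 0.3  # dim a patch of the disk to mimic a cloud

clean_score, _ = quality_score(clean, idealized_reconstruct)
cloudy_score, cloudy_map = quality_score(cloudy, idealized_reconstruct)

# Locate the affected region by thresholding the deviation map.
affected = np.argwhere(cloudy_map > 0.1)
row_min, col_min = affected.min(axis=0)
row_max, col_max = affected.max(axis=0)

print(f"clean score:  {clean_score:.4f}")
print(f"cloudy score: {cloudy_score:.4f}")
print(f"affected rows {row_min}-{row_max}, columns {col_min}-{col_max}")
```

In this sketch the clean image reconstructs perfectly and scores zero, while the clouded image deviates from its reconstruction only inside the dimmed patch, which is how the deviation map points to the affected region.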

First author Robert Jarolim, a research scientist at the University of Graz, explains, "In our study, we applied the method to observations from the Kanzelhöhe Observatory for Solar and Environmental Research and showed that it agrees with human observations in 98.5% of cases. From the application to unfiltered full observing days, we found that the neural network correctly identifies all strong quality degradations and allows us to select the best images, which results in a more reliable observation series. This is also important for future network telescopes, where observations from multiple sites need to be filtered and combined in real-time."

The team plans to continue improving the technique to enhance the continuous tracking of solar activity.

Sources: Astronomy & Astrophysics, EurekAlert!